While writing the overview paper of the MIDOG 2022 challenge, we found that an additionally reported metric (Average Precision, AP) had been implemented incorrectly. This was caused by what is, in our interpretation, unexpected behavior of the torchmetrics package: by default, it used only the first 100 detections, without warning the user about it. After a report by Jonas from our team, this behavior has since been fixed. In addition, we had also made a coding error of our own. The primary metric, which determines the ranking on the leaderboard (and thus in the challenge), was not affected (and was also properly unit-tested, sigh).
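To illustrate why a default cap on the number of detections distorts AP, here is a minimal sketch in plain Python. It is neither our evaluation code nor the torchmetrics implementation: it computes a simple non-interpolated AP (the COCO-style mAP in torchmetrics uses 101-point interpolation instead) from detections that are assumed to be already matched to the ground truth, and `max_dets` is a hypothetical parameter standing in for the default limit of 100 detections.

```python
from typing import List, Optional


def average_precision(scores: List[float], is_tp: List[bool], num_gt: int,
                      max_dets: Optional[int] = None) -> float:
    """Non-interpolated AP: sum of precision at each true positive, divided by num_gt.

    scores/is_tp describe detections already matched to ground truth (assumption).
    max_dets is a hypothetical cap that keeps only the top-scoring detections,
    mimicking a default limit on the number of detections.
    """
    if num_gt == 0:
        return 0.0
    # Rank detections by descending confidence.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    if max_dets is not None:
        order = order[:max_dets]  # detections beyond the cap are silently dropped
    tp_count = 0
    ap = 0.0
    for rank, i in enumerate(order, start=1):
        if is_tp[i]:
            tp_count += 1
            ap += tp_count / rank  # precision at this recall step
    return ap / num_gt


# Hypothetical example: 250 ground-truth mitotic figures, all detected correctly.
scores = [1.0 - i / 1000 for i in range(250)]
is_tp = [True] * 250
print(average_precision(scores, is_tp, num_gt=250))                # 1.0
print(average_precision(scores, is_tp, num_gt=250, max_dets=100))  # 0.4
```

In this toy case, the uncapped AP is 1.0, but keeping only the top 100 detections drops it to 0.4, because the remaining 150 true positives never enter the precision-recall curve. Cases with fewer than 100 detections are unaffected, which is one reason such a cap can easily go unnoticed.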

We are really sorry that this error occurred and hope for everyone’s understanding.

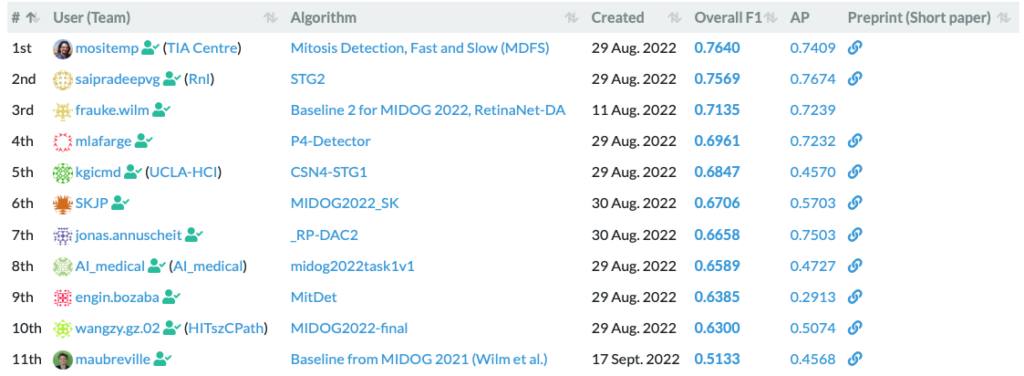

Although, as stated above, the primary metric of the challenge (F1 score) was not affected by this bug, we are aware of the importance of the AP metric and have updated the leaderboard to rectify the issue. The leaderboard for task 1, now additionally showing the AP, is:

The leaderboard for task 2, now additionally showing the AP metric, reads:

These updated metrics will also be incorporated into the soon-to-be-released preprint of the MIDOG 2022 challenge report paper.
