The dataset is available from multiple sources:

Dataset description

After downloading the dataset, you will have 200 TIFF files (cropped regions of interest from 200 individual tumor cases), and a database. The TIFF files have been converted to standard RGB profiles using the ICC profiles that the scanners provided, where available.

The database is available as sqlite (SlideRunner format) and as json (MS COCO format). For an example of how to interpret this format, please see the notebook below.
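As a quick orientation before opening the notebook, here is a minimal sketch of how a COCO-style JSON database can be summarized. It assumes the usual MS COCO top-level keys (`images`, `annotations`, `categories`); the toy data, file names, and category names below are placeholders for illustration and are not taken from the real database.

```python
from collections import Counter

# Toy stand-in for the real database (placeholder values only).
# For the real file you would use json.load() on the downloaded JSON instead.
toy_db = {
    "images": [{"id": 1, "file_name": "001.tiff"}],
    "categories": [
        {"id": 1, "name": "mitotic figure"},   # assumed label name
        {"id": 2, "name": "hard negative"},    # assumed label name
    ],
    "annotations": [
        {"id": 10, "image_id": 1, "category_id": 1, "bbox": [100, 200, 150, 250]},
        {"id": 11, "image_id": 1, "category_id": 1, "bbox": [400, 420, 450, 470]},
        {"id": 12, "image_id": 1, "category_id": 2, "bbox": [700, 710, 750, 760]},
    ],
}


def annotations_per_category(db: dict) -> Counter:
    """Count annotations per category name in a COCO-style dictionary."""
    names = {cat["id"]: cat["name"] for cat in db["categories"]}
    return Counter(names[ann["category_id"]] for ann in db["annotations"])


counts = annotations_per_category(toy_db)
print(counts)
```

The notebook remains the authoritative reference for how the database is read.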

The assignment of the scanners to the files is as follows:

001.tiff to 050.tiff: Hamamatsu XR

051.tiff to 100.tiff: Hamamatsu S360 (with 0.5 numerical aperture)

101.tiff to 150.tiff: Aperio ScanScope CS2

151.tiff to 200.tiff: Leica GT450 (only images, no annotations provided for this scanner)
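The assignment above can be expressed as a small lookup. This is only a convenience sketch; the function names are hypothetical, and the ranges are copied from the list above.

```python
from pathlib import Path

# (first file number, last file number, scanner name), as listed above
SCANNER_RANGES = [
    (1, 50, "Hamamatsu XR"),
    (51, 100, "Hamamatsu S360"),
    (101, 150, "Aperio ScanScope CS2"),
    (151, 200, "Leica GT450"),
]


def scanner_for_file(filename: str) -> str:
    """Return the scanner name for a file such as '042.tiff'."""
    number = int(Path(filename).stem)
    for first, last, scanner in SCANNER_RANGES:
        if first <= number <= last:
            return scanner
    raise ValueError(f"{filename} is outside the range 001.tiff to 200.tiff")


def has_annotations(filename: str) -> bool:
    """Files from the Leica GT450 come as images only, without annotations."""
    return scanner_for_file(filename) != "Leica GT450"


print(scanner_for_file("042.tiff"), has_annotations("042.tiff"))
```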

To get a first overview of the data, we recommend our short paper preprint on arxiv:

Quantifying the Scanner-Induced Domain Gap in Mitosis Detection [arXiv.org preprint]

Getting started: The notebook

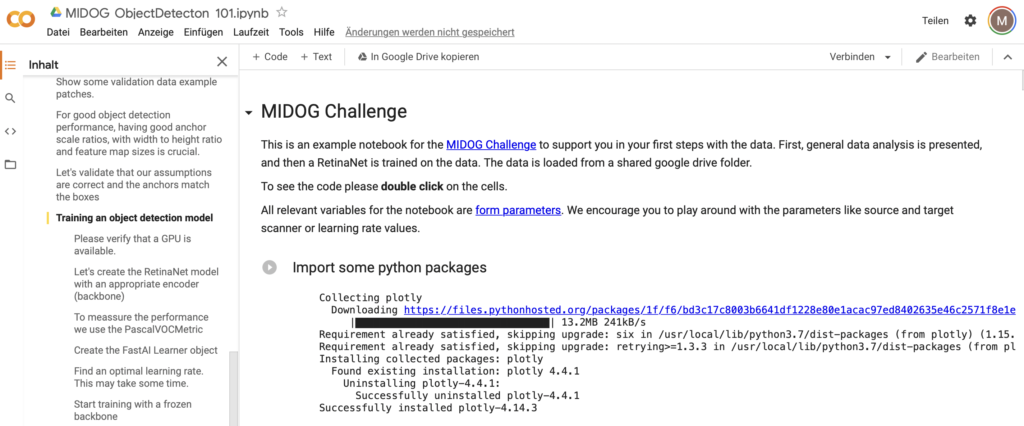

To get you started, there is an explanatory notebook on Google Colab that we really recommend for first steps:

The notebook comprises the following:

- Statistical overview of the data set

- An in-depth view into the dataset

- Training a RetinaNet using the dataset with fast.ai (pytorch)

Dear host,

Do we need to detect the hard negatives? I noticed hard negatives in the label file.

Short answer is: No!

Long answer: The hard examples are only provided to boost the gradients of your networks. They were a byproduct of our annotation process. So you can use them for sampling, but they should not be detected.

Hello,

I’m having a problem with the “getting started” notebook – there is a function called SlideContainer which is used but never defined, so when running the code it breaks. Could you please advise on what this function is?

Dear Jack,

Thank you for your interest in this challenge.

SlideContainer is imported in the code cell “Install the object detection library”

Can you please try to run all cells by using “Run all” under the menu option “Runtime”?

If you have any further question or the issue persists, please let us know.

With kind regards,

Christian

Hello Christian,

Thanks for your help, I must have been skipping over that cell in execution!

Much Appreciated,

Jack

Hello again Christian!

I was wondering whether there is any way to use v2 of fastai with the object-detection-fastai package? When the package is installed it immediately rolls back fastai to v1.

Thanks,

Jack

Moin,

Sorry, at the moment we don’t support v2, or at least we have not tested it.

But the code behind the WSI object detection is open source, so you are welcome to participate in the development.

https://github.com/ChristianMarzahl/ObjectDetection

Dear organizers,

I was wondering how the results will be evaluated and ranked. Will the ranking be based on the AP for mitotic figures on the test set?

Thanks,

Jianan

Dear Jianan,

as the threshold is also an important part that can be challenged by domain shift, we settled for detection performance as measured by the F1 score over all slides of the test set (as in: 2*TP/(2*TP+FN+FP)). Of course, we will also provide a more in-depth analysis, but this will be the main ranking metric.

Best regards,

Marc
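For readers who want to reproduce the ranking metric locally, the formula from the reply above can be sketched as follows. Two points are assumptions rather than statements from the organizers: that "over all slides" means the TP/FP/FN counts are pooled across slides before computing F1, and that an empty denominator yields 0.0.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 = 2*TP / (2*TP + FN + FP), as given in the reply above."""
    denominator = 2 * tp + fn + fp
    # Assumption: return 0.0 when there are no detections and no annotations.
    return 2 * tp / denominator if denominator else 0.0


def pooled_f1(per_slide_counts) -> float:
    """Sum (tp, fp, fn) tuples over all slides, then compute a single F1."""
    tp = sum(counts[0] for counts in per_slide_counts)
    fp = sum(counts[1] for counts in per_slide_counts)
    fn = sum(counts[2] for counts in per_slide_counts)
    return f1_score(tp, fp, fn)


# Two hypothetical slides given as (tp, fp, fn)
print(pooled_f1([(3, 1, 0), (5, 1, 2)]))
```

Pooling counts differs from averaging per-slide F1 scores, so the two should not be mixed when comparing results.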

Dear organizers,

As mentioned on the website, we need to submit a Docker container. However, I have no experience working with Docker. Could you please explain how I can submit my trained model as a Docker container?

Also, where should I submit it?

Thanks,

Ramin

Dear Ramin,

we’re at the moment busy preparing an example docker container for you and all other participants to use as a template. Since we want to make sure that everything is smooth, we’re awaiting the completion of the test set until we publish the container examples and documentation, to run some final checks.

We will also publish a detailed description of how to submit the container and where to see your preliminary results. We’re not ready yet, but you can expect everything to be available in time for you to integrate it without hurry.

Best regards,

Marc

Dear organizers,

I am wondering whether some test cases contain no mitotic figures at all. I have noticed such cases in the training set.

Best regards,

Amanda

Dear Amanda,

unfortunately, I can’t reveal if there are cases within the test set that do not contain any mitotic figures. There’s certainly the possibility for this, due to the way the data set was created. It’s a consecutive selection of cases from the diagnostic archive, and the split was random. So there can be low grade tumor cases where the ROI just does not contain any mitotic figures, however, rather rarely.

However, just to clarify one aspect of the data set: The cases 151 to 200 have no mitotic figure annotations, because these slides are only included to add more image variability. These cases do contain mitotic figures, however (just no annotation data).

Best,

Marc

Dear organizers,

I have two questions.

(1) Output format

What is the format of the output file from the Docker container? Is it similar to the format of the JSON file provided in the training dataset? Could you let us know the details of the format?

(2) Evaluation metrics

I understand that the main metric for the evaluation is the F1 score (as mentioned in the above post). How do you define TP? Since it is a detection task, I think we should detect “rectangles” enclosing mitotic cells. Do you specify a threshold for the IoU between GT rectangles and predicted rectangles?

Regards,

Satoshi

Dear Satoshi,

thanks for getting in touch. We will soon let you know the exact details of how the results are expected. For this, we will provide a template docker container (using a baseline model) and documentation, so you can have a good understanding of how exactly we expect the results. Give us a few more days until we have completed all checks and also tested the integration into the test framework, but we’re almost there. 🙂

W.r.t. your second comment: The detection goal is not rectangles but actually the center of the cell (which can, of course, be derived from the rectangle). We count everything as true positive that has a smaller euclidean distance than 7.5 microns (approx 30 px) from a ground truth annotation.

Hope that clarifies things! Best regards,

Marc
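The distance criterion in the reply above can be sketched in a few lines. The resolution of 0.25 microns per pixel is an assumption inferred from "7.5 microns (approx 30 px)"; the function names are hypothetical, and a bounding box would first have to be reduced to its center.

```python
import math

MATCH_RADIUS_MICRONS = 7.5
# Assumption: about 0.25 microns per pixel, inferred from "7.5 microns (approx 30 px)".
MICRONS_PER_PIXEL = 0.25


def microns_to_pixels(distance_microns: float, microns_per_pixel: float) -> float:
    """Convert a physical distance into a pixel distance."""
    return distance_microns / microns_per_pixel


def is_within_radius(detection, annotation, radius_px: float) -> bool:
    """True if the Euclidean distance between two (x, y) centers is smaller than radius_px."""
    return math.dist(detection, annotation) < radius_px


radius_px = microns_to_pixels(MATCH_RADIUS_MICRONS, MICRONS_PER_PIXEL)
print(radius_px, is_within_radius((1000, 1000), (1010, 1010), radius_px))
```

`math.dist` requires Python 3.8 or newer.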

Dear Organizers,

As a follow-up on your last response, I notice you mention your True Positive criteria being “We count everything as true positive that has a smaller euclidean distance than 7.5 microns (approx 30 px) from a ground truth annotation.”

Can you clarify how you account for potential multiple detections around a single ground truth location? Your answer implies that any detection (potentially more than one) will be counted as a true positive as long as it is within the distance threshold. Or will multiply detected mitoses be counted as false positives beyond the first detection?

Thank you for the clarification,

Kind regards,

Rutger

Dear Rutger,

that’s a very relevant question. In fact, we discussed it quite a bit when we drafted the challenge description and then stated:

“The data set does not contain multiple annotations for the same mitotic figure. We expect participants to also run non-maximum suppression to ensure a mitotic figure object is only detected once. We will count detections matching an annotation only once as true positive, multiple annotations thus lead to false positives.” (on page 13)

So yes, multiply detected mitoses will be counted as false positives beyond the first detection.

Best regards,

Marc
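To make the counting rule above concrete, here is a minimal sketch of one-to-one matching between detections and annotations. It is a simple greedy scheme under stated assumptions (detections processed in the given order, each matched to the nearest still-unmatched annotation within 30 px) and is not the organizers' evaluation code, which may differ in its details.

```python
import math


def count_matches(detections, annotations, radius_px: float = 30.0):
    """Return (tp, fp, fn) where each annotation can be matched at most once.

    detections and annotations are lists of (x, y) center coordinates.
    """
    unmatched = list(annotations)
    tp = fp = 0
    for detection in detections:
        best_index = None
        best_distance = radius_px
        for index, annotation in enumerate(unmatched):
            distance = math.dist(detection, annotation)
            if distance < best_distance:
                best_index, best_distance = index, distance
        if best_index is None:
            fp += 1  # no free annotation nearby: false positive
        else:
            unmatched.pop(best_index)  # this annotation is now used up
            tp += 1
    return tp, fp, len(unmatched)


# Two detections hit the same mitotic figure; only the first counts as a TP.
result = count_matches(
    detections=[(100, 100), (105, 100), (500, 500)],
    annotations=[(102, 100), (900, 900)],
)
print(result)
```

Running non-maximum suppression before submitting, as the challenge description asks, removes such duplicate detections before they turn into false positives.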

Dear Organizers,

I noticed that you said, “The detection goal is not rectangles but actually the center of the cell”, so can I make the task a point detection task rather than a bounding box detection task?

Yes, in fact, you can do that 🙂 The detection goal is the centers of mitoses. Whether you reach that via object detection, bounding boxes, instance segmentation or point detection is completely up to you!

Best regards

Marc

I have a very basic question. From what I found, the COCO dataset typically uses “bbox”: [x, y, width, height], but I think in this dataset it is [x, width, y, height]. Can you please correct me?

So in fact we’re using [x1,y1,x2,y2] which is the same as [x,y,x+w,y+h]. Not sure if this is different to MS COCO, but it’s not uncommon … 🙂
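For comparison, the MS COCO convention stores boxes as [x, y, width, height]. Converting between that and the corner format described in the answer above, and deriving the cell center from a box, can be sketched as follows. The helper names are hypothetical.

```python
def corners_to_xywh(box):
    """[x1, y1, x2, y2] -> [x, y, width, height]."""
    x1, y1, x2, y2 = box
    return [x1, y1, x2 - x1, y2 - y1]


def xywh_to_corners(box):
    """[x, y, width, height] -> [x1, y1, x2, y2]."""
    x, y, width, height = box
    return [x, y, x + width, y + height]


def box_center(box):
    """Center (x, y) of a box given as [x1, y1, x2, y2]."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2, (y1 + y2) / 2)


print(corners_to_xywh([100, 200, 150, 250]), box_center([100, 200, 150, 250]))
```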

Hi, I cannot verify my email successfully when submitting my first container. It shows that I have a request ongoing. I have received a verification email and clicked the link, but I still cannot verify successfully. The message says: ‘Your verification request is under review by the site administrators.’

I feel very anxious about this problem and hope it can be solved soon. Thanks a lot!

Dear Sen Yang, please contact support@grand-challenge.org with this problem. I am sure they can help you!

OK. To be honest, this has been my most unpleasant experience of participating in a competition. It is a great blow to participants’ enthusiasm. I contacted the platform and no one got back to me. The platform rejected my verification and said that I have multiple accounts, but I only have one account for participating in the contest, and now I am not allowed to submit.

Dear Sen Yang,

I am sorry that this happened to you. Please keep in mind that there might have been a misunderstanding. In general, the people at grand-challenge are really helpful and try their best. It is the first time that they are running a container-based operation and of course there is a lot to learn.

On the other hand, please understand that none of us is getting a single dollar for this effort. We’re doing this completely as a service to the community and it involves a lot of work. Also keep in mind that we might be located in another time zone and our office hours may be very different from yours.

So, sorry again if you had an unpleasant experience. Maybe if you give it another try and ask politely it will all work out fine.

Best regards

Marc

Dear Marc,

Thank you very much. I have contacted them. They said that I registered two accounts on the platform a long time ago, so now they have blocked all my previous accounts. I can’t even log in to the platform now. I registered, but I haven’t submitted even once and I haven’t cheated, and now I don’t even have an account. Can you help me?

Thanks

Sen

‘This account is inactive.’

Dear Organizers,

Hi,

I am a student from Tsinghua University working on medical imaging. I have to say that MIDOG is great for our community, and thank you for all of your countless efforts. The huge amount of data, the annotations and the Docker-based submission are amazing.

However, my Grand Challenge account became inactive this morning. I do not know what happened. I have spent a lot of time on the competition, so I am a little anxious and worried. I would appreciate it if you could tell me the reason. My Grand Challenge account is 937586119@qq.com. Thanks again for all of your efforts.

BEST WISHES!

Amanda

Dear organizer,

Thanks for all the effort you have put in; it has kept me immersed in the challenge these days. Whatever the final result is, I have fallen in love with mitosis detection.

However, I think the challenge is a little unfair in that a team of x people gets x submissions per day, while a single participant gets only one. From the leaderboard, we can see that some teams have several submissions on the same day with very close F1 scores and the same recall. It seems that their submissions use the same code with different thresholds.

This is the screenshot. https://drive.google.com/file/d/1ANZOfwkJv_5E5JZ2OtFbJQg0d2xVgs8q/view?usp=sharing

I hope every participant and team can be treated equally. Finally, thanks again for everything you have done for the community.

BEST WISHES!

Amanda

Dear Amanda,

Thank you for your positive feedback! We are happy to hear that we have been able to make you fall in love with this topic!

Thank you also for your second point of feedback. We have discussed the issue with repeated submissions per team already internally and reference it also in a blog post. We are giving different participants in a team the benefit of the doubt with the assumption that they are submitting substantially different approaches (as also required for the final submission). This of course does not apply to cases of obvious misconduct.

We will further evaluate this point in our follow-up discussions of the challenge.

Let me shortly mention that the preliminary test set is not provided as a means to optimize your model on the test set, but to assert the functionality of the model on this new data. At the same time, competitions thrive and have the potential to really help in the discovery of new and interesting approaches if participants adhere to fairness and sound scientific practice.

Kind regards,

Katharina

Dear Katharina,

I don’t agree with your point of view. On other competition platforms, such as Kaggle, a team shares a single submission quota. Why give each member of a team the opportunity to submit separately? They are working on a single solution to begin with. This is seriously unfair to other teams. Many teams have more than 5 people, and each team should still have only one chance to submit. If this is how it works, I could register multiple accounts and submit different solutions. In short, this does not comply with the rules of the MICCAI competition at all, and it makes the competition unconvincing.

Thanks

Sen

Dear Sen,

Thank you for your comment. Our notion comes from the following idea: From our point of view, the main value behind the challenge is to look at the problem of domain shift for Mitosis detection and learn how we can tackle it with different approaches – the ranking on the leaderboard is meant as an additional motivating factor but should not be the only outcome. This justified the request of two groups from the same lab with two different ideas, with a subset of people who are members in each group. We wanted to give them the opportunity to submit these two fundamentally different approaches. We will re-evaluate this policy after the challenge, again discuss its pros and cons, including the points that you have mentioned, and integrate this in future challenge designs.

Thank you,

Katharina

Dear Katharina,

I have two questions about the final submission.

1. Regarding the final abstract: must it be on arxiv or medrxiv.org, or can I upload it to my own GitHub?

2. Regarding the number of submissions: can the same team submit only once, with no multiple submissions, unlike in the Preliminary Test Phase?

Thanks

Sen

Hi Sen,

Short request – kindly post your comment also to the matching blog post – that makes it also easier for other participants who have the same questions.

Regarding your questions:

1. We strongly discourage participants from submitting anything other than an arxiv (or medRxiv/bioRxiv) link.

2. If multiple approaches are submitted by members of the same team, they have to be substantially different as described in their abstract and assessed by peer review. We will not accept two submissions that link to the same abstract, algorithm or which are fundamentally the same method. Here I kindly refer you to my previous comment.

Kind regards,

Katharina